dummy_service

fluent.conf

config file: WriteToMongo/fluent/fluent.conf

<match debug.*>

@type stdout

</match>

<source> (1)

@type tail

path /var/log/*.log

path_key tailed_path

pos_file /tmp/fluentd--1605454018.pos

pos_file_compaction_interval 30s

refresh_interval 30s

skip_refresh_on_startup

tag stats.node

enable_stat_watcher false

enable_watch_timer true

read_from_head true

follow_inodes true # Without this parameter, file rotation causes log duplication

<parse>

@type none

keep_time_key true

</parse>

</source>

# define the source which will provide log events

<source> (1)

@type tail (2)

path /var/log-in/*/* (3)

path_key tailed_path

pos_file /tmp/fluentd--1605454014.pos (4)

pos_file_compaction_interval 10s

refresh_interval 30s

skip_refresh_on_startup

tag log.node (5)

enable_stat_watcher false (6)

enable_watch_timer true (7)

read_from_head true

follow_inodes true # Without this parameter, file rotation causes log duplication

<parse>

@type none (8)

keep_time_key true

</parse>

</source>

## match tag=log.* and write to mongo

<match log.*> (9)

@type copy

copy_mode deep (10)

<store ignore_error> (11)

@type mongo (12)

connection_string mongodb://mongo.poc-datacollector_datacollector-net:27017/fluentdb (13)

#database fluentdb

collection test

#host mongo.poc-datacollector_datacollector-net

#port 27017

num_retries 60

capped (14)

capped_size 100m

<inject>

# key name of timestamp

time_key time

</inject>

<buffer>

retry_wait 50s

flush_mode immediate (15)

#flush_interval 10s

</buffer>

</store>

<store ignore_error>

@type stdout

</store>

<store ignore_error>

@type file

path /tmp/mylog

<buffer>

timekey 1d

timekey_use_utc true

timekey_wait 10s

</buffer>

</store>

</match>

| 1 | <source> directives determine the input sources. The source submits events to the Fluentd routing engine. An event consists of three entities: tag, time and record. |

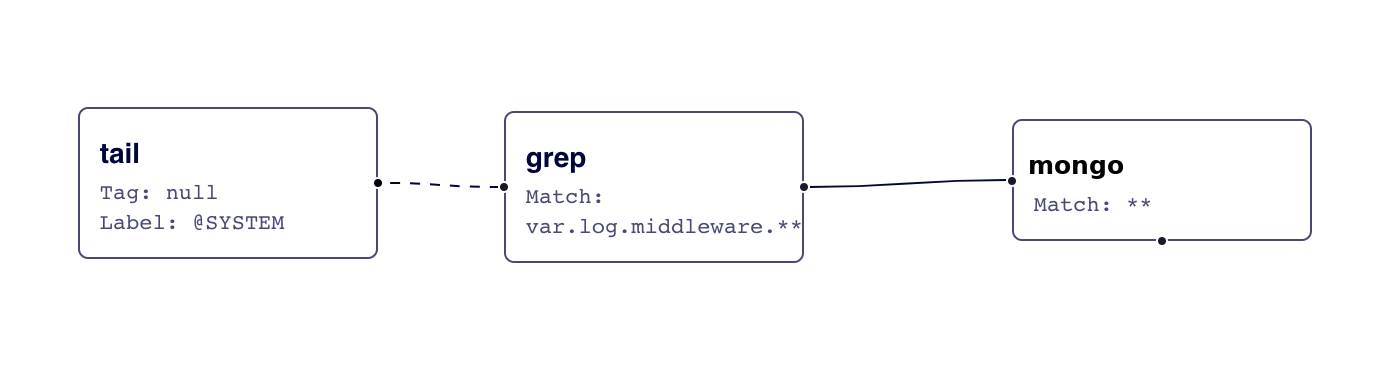

| 2 | The tail input plugin lets Fluentd read events from the tail of text files. Its behavior is similar to the tail -F command. See Figure 1. |

| 3 | The path(s) to read. Multiple paths can be specified, separated by commas (','). A '*' wildcard can be included so that watched files are added and removed dynamically. Fluentd refreshes the list of watched files every refresh_interval. |

| 4 | pos_file stores the positions of multiple files in one file, so a single pos_file parameter per source is enough. Do not share a pos_file between tail configurations; doing so causes unexpected behavior, such as corrupted pos_file content. |
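The idea behind a position file can be sketched in a few lines of Python. This is only an illustration of the concept, not Fluentd's implementation: the function name `read_new_lines` is hypothetical, the JSON layout is an assumption made for readability (the real pos_file uses its own format), and rotation and inode tracking are not handled.

```python
import json
import os


def read_new_lines(log_path: str, pos_path: str) -> list[str]:
    """Return complete lines appended to log_path since the saved offset."""
    # Load the saved offsets; one position file can track many log files.
    positions = {}
    if os.path.exists(pos_path):
        with open(pos_path, encoding="utf-8") as f:
            positions = json.load(f)

    offset = positions.get(log_path, 0)
    with open(log_path, "rb") as f:
        f.seek(offset)
        data = f.read()

    # Consume only complete lines; a partial trailing line waits for the next call.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()

    # Persist the new offset so a restart does not re-read old lines.
    positions[log_path] = offset + end
    with open(pos_path, "w", encoding="utf-8") as f:
        json.dump(positions, f)
    return lines
```

Calling the function twice without new writes returns an empty list the second time, which is the property that keeps a restarted tail from emitting the same lines again. Two readers writing to the same position file would overwrite each other's offsets, which is one way to picture why sharing a pos_file is discouraged.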

| 5 | The tag of the event. |

| 6 | Enables the additional inotify-based watcher. It is set to false here, so only the timer-based watcher is used. At least one of enable_watch_timer and enable_stat_watcher must be true. |

| 7 | Enables the additional watch timer. At least one of enable_watch_timer and enable_stat_watcher must be true. |

| 8 | The none parser plugin keeps each line as-is in a single field. Use this format to defer parsing and structuring of the data to a later stage. |
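As a rough picture of what the second source above emits for each line, the following Python sketch builds an event from its three parts. The names `Event` and `make_event` are hypothetical; the field name `message` is the none parser's default key, and `tailed_path` comes from the `path_key` setting in the config.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class Event(NamedTuple):
    tag: str
    time: datetime
    record: dict


def make_event(line: str, path: str, tag: str = "log.node") -> Event:
    """Build an event the way the tail source with the none parser would, roughly."""
    # none parser: the whole line goes into one field, unparsed.
    record = {"message": line}
    # path_key tailed_path: the source file path is added to the record.
    record["tailed_path"] = path
    # No timestamp is parsed from the line, so the read time is used.
    return Event(tag=tag, time=datetime.now(timezone.utc), record=record)
```

For example, `make_event("GET /health 200", "/var/log-in/app/a.log").record` contains the raw line under `message` and the file path under `tailed_path`.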

| 9 | <match> directives determine the output destinations. The match directive looks for events with matching tags and processes them. The most common use of the match directive is to output events to other systems. |
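How a pattern such as log.* selects events can be illustrated with a small matcher. This is a simplified sketch that covers only the `*` wildcard (exactly one tag part) and the `**` wildcard (zero or more tag parts); the function name `tag_matches` is hypothetical and other pattern forms are left out.

```python
def tag_matches(pattern: str, tag: str) -> bool:
    """Return True if a dot-separated tag matches a pattern with * and **."""

    def match(p: list[str], t: list[str]) -> bool:
        if not p:
            # Pattern exhausted: match only if the tag is exhausted too.
            return not t
        head, rest = p[0], p[1:]
        if head == "**":
            # ** may absorb zero or more tag parts.
            return any(match(rest, t[i:]) for i in range(len(t) + 1))
        if not t:
            return False
        # * matches exactly one part; anything else must match literally.
        return (head == "*" or head == t[0]) and match(rest, t[1:])

    return match(pattern.split("."), tag.split("."))
```

With the two patterns used in this file, `tag_matches("log.*", "log.node")` is true, while `tag_matches("log.*", "stats.node")` and `tag_matches("debug.*", "stats.node")` are both false, so events from the first source (tag stats.node) are not picked up by either match block.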

| 10 | Chooses how events are passed to the <store> plugins. With deep, each store plugin receives its own deep copy of the events. This mode is useful when a nested field is modified after out_copy, e.g. Docker Swarm/Kubernetes related fields. |
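The difference between sharing one record and handing each store a deep copy can be shown with plain Python dictionaries. This is an analogy rather than Fluentd code: `fan_out` and `demo` are hypothetical names, and the nested `kubernetes.labels` field is made up for the example.

```python
import copy


def fan_out(record: dict, stores: list, deep: bool) -> None:
    """Pass the record to every store, optionally as an independent deep copy."""
    for store in stores:
        store(copy.deepcopy(record) if deep else record)


def demo(deep: bool) -> dict:
    """Run one mutating store and one collecting store; return what the second saw."""
    seen = []

    def mutating_store(rec: dict) -> None:
        # Rewrites a nested field, as a later processing step might.
        rec["kubernetes"]["labels"]["app"] = "rewritten"

    def collecting_store(rec: dict) -> None:
        seen.append(rec)

    record = {"message": "hi", "kubernetes": {"labels": {"app": "web"}}}
    fan_out(record, [mutating_store, collecting_store], deep)
    return seen[0]
```

With `demo(deep=True)` the second store still sees the original nested value, because the first store changed only its own copy. With `demo(deep=False)` the change leaks into the shared record.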

| 11 | Specifies the storage destinations. The format is the same as the <match> directive. This section is required at least once. |

| 12 | The mongo output plugin writes records into MongoDB, the document-oriented database system. |

| 13 | The MongoDB connection string in URI format. |

| 14 | This option enables the capped collection. This is always recommended. Capped collections are fixed-size collections that support high-throughput operations that insert and retrieve documents based on insertion order. Capped collections work in a way similar to circular buffers: once a collection fills its allocated space, it makes room for new documents by overwriting the oldest documents in the collection. |
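The circular-buffer behavior can be pictured with a bounded deque in Python. This is an analogy only: `capped_insert` is a hypothetical helper, and the limit here is a document count chosen for brevity, whereas the `capped_size 100m` setting above limits the collection by size.

```python
from collections import deque


def capped_insert(docs: list, max_docs: int) -> list:
    """Insert docs in order into a bounded buffer and return what survives."""
    # A deque with maxlen silently drops the oldest item when it is full.
    collection = deque(maxlen=max_docs)
    for doc in docs:
        collection.append(doc)
    # Remaining documents stay in insertion order.
    return list(collection)
```

Inserting five documents into a buffer that holds three leaves only the last three, oldest first, which mirrors how the newest log records replace the oldest once the collection is full.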

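| 15 | Flushing parameters: with flush_mode immediate, the output plugin writes a chunk as soon as events are appended to it, instead of waiting for a flush_interval. |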

Figure 1. type tail